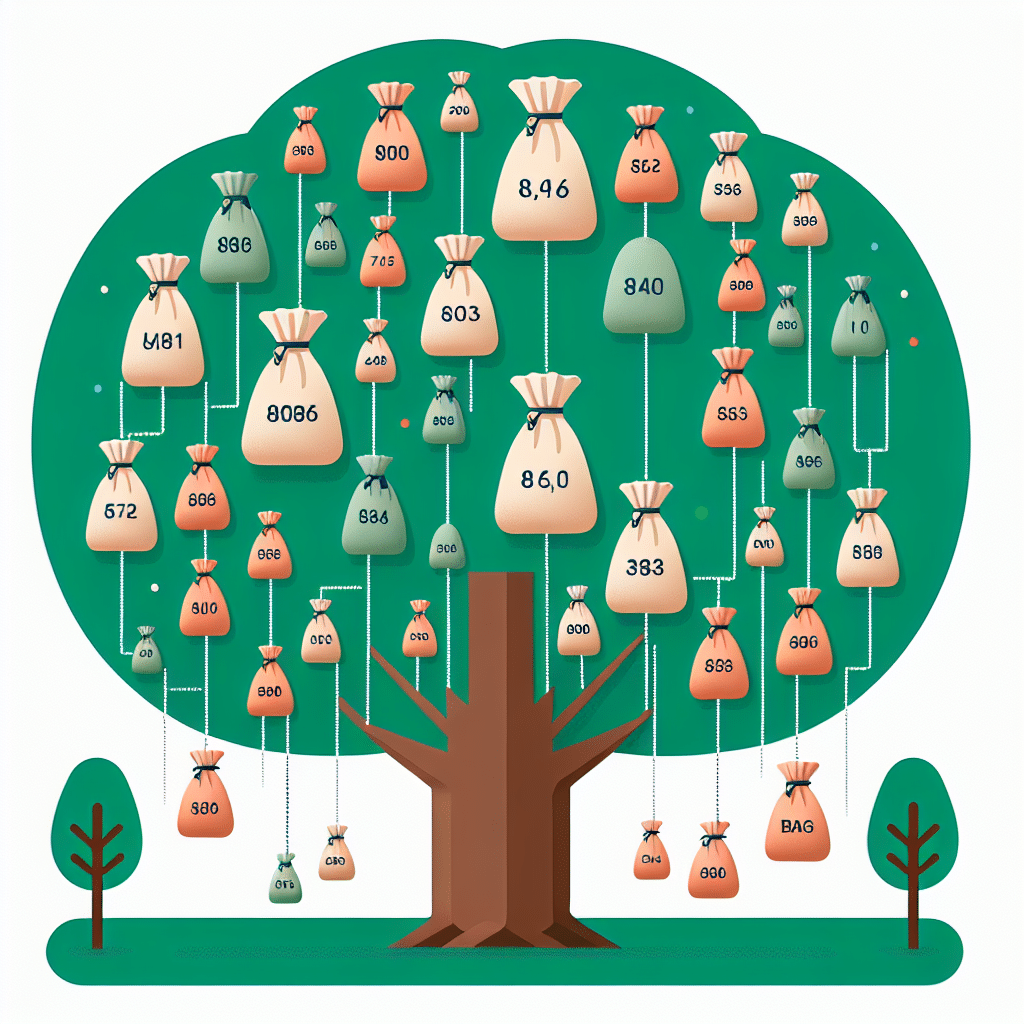

In the context of Random Forest, “bagsize” refers to the number of samples used to build each individual decision tree in the ensemble. Each tree is trained on its own random sample of the training data, and the size of that sample is set by the bagsize. Because every tree sees a different sample, the trees differ from one another, which is what allows the averaged ensemble to generalize well and resist overfitting. Bagsize is usually expressed relative to the number of training instances. A common default is a bootstrap sample as large as the training set, drawn with replacement. Such a sample contains on average about 63.2% of the distinct training instances, and the roughly 36.8% left out of each tree (the "out-of-bag" samples) can be used to estimate the model's error without a separate validation set. Some libraries instead expose the bag size directly as a fraction, sometimes with 0.632 as the default. Because bagsize can noticeably affect model performance, it is a hyperparameter worth tuning, for example with cross-validation.
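The 63.2% figure follows from sampling with replacement. If a bag of n draws is taken from a training set of n instances, each draw misses a given instance with probability 1 − 1/n, so the probability that the instance appears in the bag at least once is shown below, together with the general case of a bag of m = f · n draws (f being the bag size as a fraction of the training set):

```latex
\Pr[\text{instance } i \in \text{bag}] = 1 - \left(1 - \frac{1}{n}\right)^{n}
  \xrightarrow{\;n \to \infty\;} 1 - e^{-1} \approx 0.632

% General case: a bag of m = f \cdot n draws with replacement
\Pr[\text{instance } i \in \text{bag}] = 1 - \left(1 - \frac{1}{n}\right)^{f n}
  \xrightarrow{\;n \to \infty\;} 1 - e^{-f}
```

For example, with n = 100 the exact value is 1 − 0.99^100 ≈ 0.634, already close to the limit. The complementary ≈ 36.8% of instances are out-of-bag for that tree.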

Understanding Random Forest

Random Forest is a popular ensemble learning technique primarily used for classification and regression tasks. It combines multiple decision trees to produce a more robust and accurate predictive model. The key features of Random Forest include:

- Ensemble Learning: Random Forest builds multiple decision trees and combines their predictions (by majority vote for classification, by averaging for regression) to improve overall performance.

- Random Sampling: The model uses bootstrapping to create diverse decision trees, so that each tree is trained on a different random sample of the training data.

- Feature Randomness: When splitting nodes in a tree, a random subset of features is considered, which helps in reducing correlation between the trees.
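The two sources of randomness listed above can be sketched in a few lines of plain Python. This is a minimal illustration, not a real tree learner: the helper names bootstrap_rows and split_features and the parameter bag_fraction are hypothetical, and using sqrt(number of features) as the per-split feature count is an assumption (it is a common default for classification).

```python
import math
import random


def bootstrap_rows(n_samples, bag_fraction, rng):
    """Hypothetical helper: row indices for one tree, drawn WITH replacement."""
    bag_size = max(1, round(bag_fraction * n_samples))
    return [rng.randrange(n_samples) for _ in range(bag_size)]


def split_features(n_features, max_features, rng):
    """Hypothetical helper: candidate features for one node split, drawn WITHOUT replacement."""
    return sorted(rng.sample(range(n_features), max_features))


rng = random.Random(0)  # fixed seed so the sketch is reproducible
n_samples, n_features = 100, 16
max_features = int(math.sqrt(n_features))  # assumed sqrt(p) rule -> 4 candidate features

forest_inputs = []
for tree_id in range(3):
    # Each tree gets its own bootstrap bag (here the same size as the training set).
    rows = bootstrap_rows(n_samples, bag_fraction=1.0, rng=rng)
    # A real tree draws a fresh feature subset at EVERY node; two nodes are shown here.
    node_features = [split_features(n_features, max_features, rng) for _ in range(2)]
    forest_inputs.append((rows, node_features))
    print(f"tree {tree_id}: {len(set(rows))} distinct rows, split candidates {node_features}")
```

Each tree ends up with duplicated rows and a different set of distinct rows, and each node considers only a small random subset of features; both effects decorrelate the trees.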

What is Bagsize in the Context of Random Forest?

Bagsize, often called the bootstrap sample size, is a key parameter when training a Random Forest model. It sets how many samples are drawn from the original dataset to build each individual decision tree. The aim is to train every tree on a different sample, so that the trees vary enough from one another for their combined prediction to generalize well. Note the following about bagsize:

- Bootstrapping: Each bag is typically drawn by bootstrapping, that is, by sampling with replacement from the training set, so some instances appear several times in a bag and others not at all.

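- Default Value: Many implementations default to a bag as large as the training set (for example, max_samples=None in scikit-learn). Because sampling is with replacement, such a bag contains on average about 63.2% (1 − 1/e) of the distinct training instances, which is where the commonly quoted 0.632 figure comes from.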

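- Impact on Performance: A larger bagsize gives each tree more data and makes the individual trees stronger, but it also makes the trees more similar to one another, which reduces the benefit of averaging and can leave the ensemble prone to overfitting. Conversely, a smaller bagsize increases the variety among trees but gives each tree less data, which can lead to underfitting.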
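The effect of bag size on tree diversity can be checked with a quick simulation. The sketch below (plain Python; the function bag_stats is hypothetical) draws two bags for two imaginary trees and reports the fraction of distinct training rows in one bag and the Jaccard overlap between the two bags, used here only as a rough proxy for how similar the trees' training data is. The bag fractions tried are arbitrary.

```python
import random


def bag_stats(n_samples, bag_fraction, replace=True, seed=0):
    """Hypothetical helper: distinct-row fraction of one bag and overlap of two bags."""
    rng = random.Random(seed)
    bag_size = max(1, round(bag_fraction * n_samples))

    def draw():
        # With replacement: bootstrap draws; without: a simple random subset.
        if replace:
            return {rng.randrange(n_samples) for _ in range(bag_size)}
        return set(rng.sample(range(n_samples), bag_size))

    bag_a, bag_b = draw(), draw()
    unique_fraction = len(bag_a) / n_samples
    # Jaccard overlap between two trees' bags (1.0 means identical training rows).
    overlap = len(bag_a & bag_b) / len(bag_a | bag_b)
    return unique_fraction, overlap


for replace in (True, False):
    for fraction in (0.3, 0.632, 1.0):
        unique, overlap = bag_stats(10_000, fraction, replace=replace)
        print(f"replace={replace}, fraction={fraction}: "
              f"distinct={unique:.3f}, overlap={overlap:.3f}")
```

With replacement and a full-size bag, the distinct fraction should come out close to 0.632, and the overlap between bags grows as the bag fraction grows. Without replacement, a fraction of 1.0 gives every tree exactly the same rows (overlap 1.0).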

Choosing the Right Bagsize

Choosing the appropriate bagsize can be crucial for optimizing a Random Forest model. Here are several considerations and strategies to help you select the right bagsize:

1. Cross-Validation Techniques

Cross-validation lets you compare bagsize settings on data the model was not trained on. By splitting your data into several folds and training and evaluating the model once per fold, you can see how changes in bagsize affect both the average accuracy and how much it varies across folds (its stability).

2. Hyperparameter Tuning

Utilize grid search or randomized search methods for hyperparameter tuning. By systematically testing a range of bagsize values, you can identify the optimal configuration that yields the best performance.

3. Model Evaluation Metrics

Monitor relevant metrics such as accuracy, precision, recall, and F1 score during the evaluation phase. Tracking these metrics can guide you in understanding the effectiveness of different bagsize settings.
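The three steps above can be combined in one grid search. The sketch below assumes scikit-learn 0.22 or later, where the bag size of RandomForestClassifier is the max_samples parameter (a float is a fraction of the training rows; None means a full-size bootstrap sample). The synthetic dataset, the candidate values, and the choice of F1 for picking the winner are illustrative assumptions, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Synthetic binary-classification data stands in for a real training set.
X, y = make_classification(n_samples=1000, n_features=20, n_informative=5,
                           random_state=0)

# Candidate bag sizes. max_samples only takes effect when bootstrap=True (the default).
param_grid = {"max_samples": [0.2, 0.4, 0.632, 0.8, None]}

search = GridSearchCV(
    RandomForestClassifier(n_estimators=100, random_state=0),
    param_grid,
    cv=5,                                              # 5-fold cross-validation
    scoring=["accuracy", "precision", "recall", "f1"],  # metrics tracked per setting
    refit="f1",                                        # pick the bag size with the best mean F1
)
search.fit(X, y)

print("best max_samples:", search.best_params_["max_samples"])
for params, f1, f1_std in zip(search.cv_results_["params"],
                              search.cv_results_["mean_test_f1"],
                              search.cv_results_["std_test_f1"]):
    # Mean and spread across folds: accuracy of the setting and its stability.
    print(params["max_samples"], round(f1, 3), "+/-", round(f1_std, 3))
```

The per-metric means and standard deviations in cv_results_ (for example mean_test_recall) can be inspected in the same way if another metric matters more for the problem.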

Impact of Bagsize on Overfitting and Underfitting

The relationship between bagsize and model performance is fundamentally tied to the concepts of overfitting and underfitting:

- Overfitting: When the bagsize is too large (especially when sampling without replacement), the trees see nearly the same data and tend to fit the same noise. Averaging such similar trees cancels less of that noise, so the ensemble generalizes worse to new data.

- Underfitting: Conversely, a very small bagsize gives each tree too little data to capture the essential patterns of the dataset, which can lead to poor predictive performance even after averaging.
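One way to look for these effects is to compare training accuracy with out-of-bag (OOB) accuracy across bag sizes: a large gap hints at overfitting, while low values of both hint at underfitting. The sketch below assumes scikit-learn 0.22 or later (max_samples is the bag size, oob_score=True scores each row using only the trees that did not see it); the synthetic data, the 10% label noise (flip_y), and the bag sizes tried are illustrative assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Label noise (flip_y) gives the trees some noise they could memorize.
X, y = make_classification(n_samples=2000, n_features=20, n_informative=5,
                           flip_y=0.1, random_state=0)

results = {}
for fraction in (0.05, 0.2, 0.5, 0.8, None):  # None = full-size bootstrap sample
    forest = RandomForestClassifier(
        n_estimators=200,
        max_samples=fraction,  # bag size for every tree
        oob_score=True,        # estimate accuracy from out-of-bag rows only
        random_state=0,
    ).fit(X, y)
    train_acc = forest.score(X, y)  # optimistic: rows were seen by many trees
    results[fraction] = (train_acc, forest.oob_score_)
    print(f"max_samples={fraction}: train={train_acc:.3f}  oob={forest.oob_score_:.3f}")
```

Training accuracy here is optimistic by construction, so only the OOB column estimates generalization; the gap between the two columns is a rough diagnostic, not a formal test.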

Expert Insights on Bagsize in Random Forest

Practitioners generally treat bagsize as one part of a broader hyperparameter tuning process rather than tuning it in isolation. Random Forest models are applied across many domains, from finance to healthcare, and organizations that rely on them should periodically reassess their bagsize settings, since the underlying data distribution can change over time.

Conclusion

In summary, bagsize plays a fundamental role in the construction and performance of Random Forest models. Understanding its significance and effect on model behavior is essential for practitioners looking to leverage this powerful machine learning technique. By utilizing methods such as cross-validation, hyperparameter tuning, and careful metric evaluation, one can optimize the bagsize to strike the right balance between model complexity and predictive accuracy.

Frequently Asked Questions

What is the optimal bagsize for Random Forest?

The optimal bagsize depends on the specific dataset and problem. A common default is a bootstrap sample the same size as the training set, which contains on average about 63.2% of the distinct training instances. Ultimately, this parameter should be tuned based on performance metrics obtained through methods such as cross-validation.

Can bagsize be equal to the total dataset size?

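Yes. When sampling is with replacement, a bag the size of the full training set is the standard bootstrap sample and the default in many implementations; each tree still sees a different mix of roughly 63.2% of the distinct instances. If sampling is without replacement, however, a bag the size of the full dataset gives every tree identical training data. Feature randomness is then the only source of diversity, the trees become highly correlated, and much of the benefit of using an ensemble is lost.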

How does bagsize affect the training time of a Random Forest model?

A larger bagsize may result in longer training times due to the increased volume of data being processed for each tree. Conversely, a smaller bagsize will often lead to quicker training but might compromise model performance.

Is it necessary to adjust bagsize during model tuning?

It is generally worth tuning bagsize along with other hyperparameters. Each dataset may respond differently to changes in bagsize, and adjusting it can improve the model's predictive performance.

Further Reading and Resources

For more detailed insights into the mechanics of Random Forest and the importance of bagsize, consider reviewing the following resources: